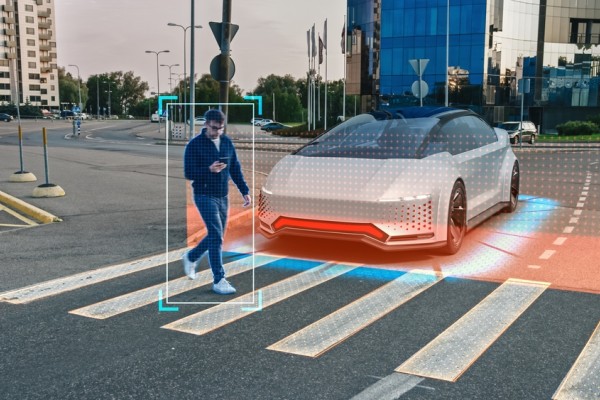

A recent study by researchers at King's College London and Peking University in China found that pedestrian-detection software for self-driving vehicles identifies dark-skinned individuals less accurately than light-skinned individuals. The researchers tested eight AI-based pedestrian detectors used by self-driving car manufacturers and found a 7.5% disparity in detection accuracy between lighter-skinned and darker-skinned subjects. The study also found that lighting conditions on the road further reduce the software's ability to detect darker-skinned pedestrians: the incorrect detection rate for dark-skinned pedestrians rose from 7.14% in the daytime to 9.86% at night.

In addition, the software detected adults more reliably than children, correctly identifying adults at a rate 20% higher than children. Jie M. Zhang, one of the researchers who took part in the study, commented that "fairness when it comes to AI is when an AI system treats privileged and under-privileged groups the same, which is not what is happening when it comes to autonomous vehicles."

What is this page?

You are reading a summary article on the Privacy Newsfeed, a free resource that helps DPOs and other professionals with privacy or data protection responsibilities stay informed of industry news in one place. Each summary is a brief snippet covering a single piece of original content or several articles on a common topic or thread. The main contributor is listed in the top left-hand corner, just beneath the article title.
